ce_timing.html

1. Measure the digout command using logic analyser. Used t4_app to do some DIGIOUT command between

a parallel sequence output.

CE_PARAM_UPDWR_LO + CE_CMD_UPDWR

reps cd.n time each.

---------------------------------

0 12 9.08

1 14 9.12 .020

2 16 9.23 .037

3 18 9.31 .038

4 20 9.43 .043

5 22 9.47 .039

10 32 9.87 .039

100 212 17.07 .039

CE_PARAM_UPDWR_HI + CE_PARAM_UPDWR_LO + CE_CMD_UPDWR

----------------------------------------------------

0 12 9.07

1 15 9.18 .036

2 18 9.29 .036

3 21 9.40 .036

4 24 9.51 .036

5 27 9.62 .036

10 42 10.18. .037

100 312 20.07 .037

Hmmm.... takes longer but, is doint HI+LO+UPDWR is quicker per operation?

How about just CE_CMD_UPDWR

----------------------------------------------------

0 12 9.04

5 17 9.32 .056

10 22 9.58 .054

100 112 14.07 .050

200 212 19.07 .050

So..

CE_CMD_UPDWR 100 took 5.03 : 0.050 usec each

LO+UPDWR 100 took 7.99 : 0.030 usec each

HI+O+UPDWR 100 took 11.00 : 0.030 usec each

2. Testing al_ck_ce_cmd_delay()

Using tick() to time delays:

CMD_DELAY with 9999 ITER takes: 1000106 usec

CMD_DELAY with 19999 ITER takes: 2000206 usec (what is wrong).

t2 1000 = 0.001 s - CMD_DELAY | 9 = 0.001001

t2 5000 = 0.005 s - CMD_DELAY | 49 = 0.005001

t2 100000 = 0.1 s - CMD_DELAY | 999 = 0.100011

t2 500000 = 0.5 s - CMD_DELAY | 4999 = 0.500051

t2 1000000 = 1 s - CMD_DELAY | 9999 = 1.000101

t2 5000000 = 5 s - CMD_DELAY | 19999,19999,9999 = 5.000501

t2 10000000 = 10 s - CMD_DELAY | 19999 x 4 = 10.001001

CMD_DELAY time is about 1.0001 times the requested time.

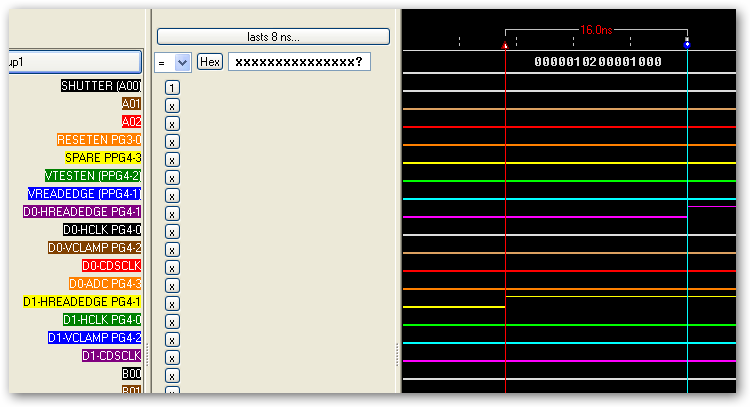

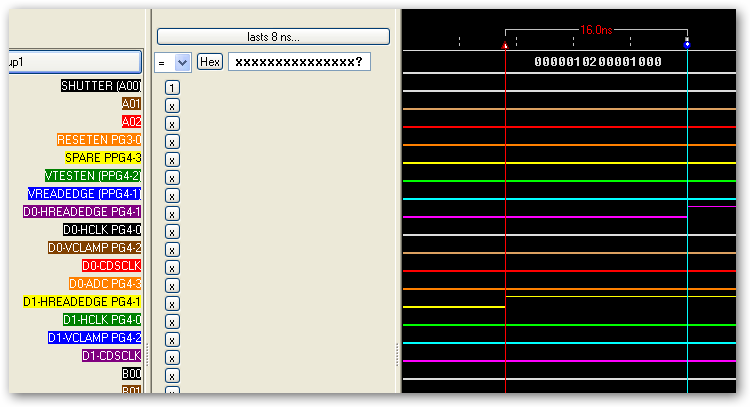

Measurement with logic analyser: 250Mhz

usec measured actual

0 9.08

1 10.09 1.01

10 19.09 10.01

100 109.10 100.02

1000 1.01ms 1000.92

3000 3.01ms 3000.92

Measurement with logic analyser: 25Mhz

usec measured actual

1000 1.01ms

2000 2.01ms 2.00

5000 5.01ms 5.00

10000 10.01ms 10.00

100000 100.02ms 100.01 (Logic analyer seeing the 1.0001 delay)

200000 200.03ms 200.02

3. Measuring CE_CMD_PARSEQ

Used t3_app(): t3 48 1 x

reps ce.n goLogic us_per_CMD_PARSEQ

0 12 9.05 .033

1 20 9.32 .030

5 52 10.27 .030

10 92 11.47 .030

11 100 11.71 .030

12 108 11.95 .031

20 172 13.86 .030

50 412 21.07 .030

1 CE_CMD_PARSEQ takes 30 ns.

8 CE_CMD_PARSEQ takes 8*30= 240 ns.

How about PARAM_VIDE_SEQ?

reps ce.n goLogic us_per_CMD_PARSEQ

0 4 9.07

10 164 13.87 .030

20 324 18.67 .030

So, CE_PARAM_VIDSEQ_PGx_x also take 30 ns.

PG3/PG4 program is: 8*30 + 8*30 = 480 ns.

5. CE_CMD_SHUTTEROPEN/CE_CMD_SHUTTERCLOS timing

open/close:

reps ce.n usec CMD

0 12 9.07

10 32 9.67 .030

50 112 12.07 .030

100 212 15.07 .030

CE_CMD_SHUTTEROPEN takes 30ns.

CE_CMD_SHUTTERCLOS takes 30ns.

5. Fillin' the fifo

ce.n usec. usec/n

20 3 .150

28 4 .148

52 7 .134

92 12 .130

172 22 .127

412 54 .131

Take CPU about 130ns to copy a command into the FIFO.

So a DIGOUT at 260ns takes 50% CPU?

6. ADC notes

Time for CD_PARAM_ADC

rep ns per_CE_CMD

0 30

1 60 30

5 180 30

10 330 30

ce_put(ce, CE_PARAM_ADC| adc) takes 30 ns.

7. CATP transfers rates

Using t9_app() I created data in the controller, then used grasp_get_image() to transfer to sgir.

transfer rates:

sg.x 2048 2048 2

2048x2048x32bits: 16MB

grasp_get_image() 16777216 bytes in 0.364 sec. 46.1 MB/s. 368.5 Mb/s

grasp_get_image() 16777216 bytes in 0.362 sec. 46.3 MB/s. 370.6 Mb/s

grasp_get_image() 16777216 bytes in 0.365 sec. 46.0 MB/s. 367.8 Mb/s

grasp_get_image() 16777216 bytes in 0.362 sec. 46.3 MB/s. 370.7 Mb/s

grasp_get_image() 16777216 bytes in 0.241 sec. 69.7 MB/s. 557.6 Mb/s

grasp_get_image() 16777216 bytes in 0.241 sec. 69.6 MB/s. 556.8 Mb/s

grasp_get_image() 16777216 bytes in 0.569 sec. 29.5 MB/s. 236.0 Mb/s

2048x1024x32bits: 8MB

grasp_get_image() 8388608 bytes in 0.118 sec. 70.9 MB/s. 567.1 Mb/s

grasp_get_image() 8388608 bytes in 0.119 sec. 70.4 MB/s. 563.4 Mb/s

grasp_get_image() 8388608 bytes in 0.122 sec. 68.9 MB/s. 551.1 Mb/s

grasp_get_image() 8388608 bytes in 0.119 sec. 70.5 MB/s. 563.6 Mb/s

1024x1024x32bits: 4MB

grasp_get_image() 4194304 bytes in 0.060 sec. 70.2 MB/s. 561.9 Mb/s

grasp_get_image() 4194304 bytes in 0.060 sec. 70.2 MB/s. 561.8 Mb/s

grasp_get_image() 4194304 bytes in 0.060 sec. 70.2 MB/s. 561.9 Mb/s

512x512x32bits:

grasp_get_image() 1048576 bytes in 0.016 sec. 67.1 MB/s. 537.2 Mb/s

grasp_get_image() 1048576 bytes in 0.016 sec. 67.2 MB/s. 537.9 Mb/s

grasp_get_image() 1048576 bytes in 0.016 sec. 67.1 MB/s. 536.5 Mb/s

Time to run t9_app in controller:

sg.x 2048 2048 0 = 0.171 sec

sg.x 2048 2048 1 = 1.184 sec // does division

sg.x 2048 2048 2 = 0.255 sec

Note: about 0.010 sec in the above time is used for catp memory allocation.

Hmm... 2Hz if we clock + sample + transfer?

maybe we should clock + add? Then transfer?

July 8, 2010: Re-do above tests with just timing

the grasp_get_image() - 1st time I included calloc() in the time for data transfers.

2048x2048x32bits: 16MB

grasp_get_image() 16777216 bytes in 0.238 sec. 70.4 MB/s. 563.3 Mb/s

grasp_get_image() 16777216 bytes in 0.238 sec. 70.5 MB/s. 563.9 Mb/s

grasp_get_image() 16777216 bytes in 0.238 sec. 70.5 MB/s. 563.7 Mb/s

grasp_get_image() 16777216 bytes in 0.238 sec. 70.5 MB/s. 564.3 Mb/s

grasp_get_image() 16777216 bytes in 0.238 sec. 70.5 MB/s. 564.0 Mb/s

2048x1024x32bits: 8MB

grasp_get_image() 8388608 bytes in 0.120 sec. 70.2 MB/s. 561.3 Mb/s

grasp_get_image() 8388608 bytes in 0.120 sec. 70.1 MB/s. 560.9 Mb/s

grasp_get_image() 8388608 bytes in 0.120 sec. 70.1 MB/s. 561.1 Mb/s

grasp_get_image() 8388608 bytes in 0.120 sec. 70.1 MB/s. 560.7 Mb/s

512x512x32bits: 1MB.

grasp_get_image() 1048576 bytes in 0.016 sec. 66.8 MB/s. 534.3 Mb/s

grasp_get_image() 1048576 bytes in 0.016 sec. 66.8 MB/s. 534.2 Mb/s

grasp_get_image() 1048576 bytes in 0.017 sec. 61.8 MB/s. 494.3 Mb/s

grasp_get_image() 1048576 bytes in 0.016 sec. 66.6 MB/s. 532.8 Mb/s

grasp_get_image() 1048576 bytes in 0.016 sec. 66.7 MB/s. 533.4 Mb/s

Aug 25, 2010: SG tells IRTF we must do streaming some benchmark while

calling sockserv_run in t9_app()

512x512x16bits: 0.5MB

grasp_get_image() 524288 bytes in 0.011 sec. 49.7 MB/s. 397.4 Mb/s

2048x2048x16bits: 8MB

grasp_get_image() 8388608 bytes in 0.159 sec. 52.7 MB/s. 421.9 Mb/s

sg.x 512 512 1:

0.289 ms to allocate pool.

6.787 ms to fill data.

sg.x 2048 2048 1:

4.448 ms to allocate pool.

107.058 ms to fill data.

Function to estimate catp pool seutp time based on number of pixel:

ns = 1.0576883951822917 * n + 11733;

for integer based math, use:

n = w*h;

usec = 12 + n/1000 + n/17000;

transfer in general take a hit (70MB/s - > 50MB/s).

Serial Fill & Transfer:

sg.x 2048 2048 0 8

0. e_usec1=4453 e_sec2=105059 e_sec3=333000

----

Rep 2/8. esec=0.243690

grasp_get_image() 8388608 bytes in 0.161 sec. 52.0 MB/s. 416.4 Mb/s

send to dv () 0.096 sec. 13654 13654 13654.0

2010-09-07 - Cross trigger measurement

Measured CE_CMD_NOTDONE1 command: 40 ns.

Measured CE_CMD_WAITDONE1 command: 40 ns.

Measured sync time of dev0&1 CE at 20 ns (measure on logic analysis 250Mhz on FPG header).

Without XTrigger, 2 consecutive ce_go() are about ~380ns apart.

2010-09-08 - t9_app and catp

Or why tony thinks it's better to co-add in RAM...

t9_app is uboot to that generate data in catp pools. It takes about

109 msec to run: 4.5 for catp setup + 105 for generating data.

Ave time to catp transfer after generating data: 0.191 sec or 43.91MB/s, 351.35MB/s.

With t9_app & 333 ms loop (3Hz, 32% busy): 0.211 sec or 39.75MB/s, 318.05MB/s.

Able to do 1000 reps OK.

With t9_app & 250 ms loop (4Hz, 43% busy): 0.232 sec or 36.15MB/s, 289.26MB/s.

Able to do 1000 reps OK.

With t9_app & 225 ms loop (4.4Hz, 48% busy): 0.220 sec or 38.13MB/s, 305.04MB/s.

Able to do 91 reps before failing.

With t9_app & 200 ms loop (5Hz, 54% busy): 0.230 sec or 36.47MB/s, 291.77MB/s.

Able to do 29 reps before failing.

Reference:

1009/sgx200

1009/sgx225

1009/sgx250

1009/sgx333

------------------------------------------------------------------------

Without XTrigger, 2 consecutive ce_go() are about ~380ns apart.

2010-09-08 - t9_app and catp

Or why tony thinks it's better to co-add in RAM...

t9_app is uboot to that generate data in catp pools. It takes about

109 msec to run: 4.5 for catp setup + 105 for generating data.

Ave time to catp transfer after generating data: 0.191 sec or 43.91MB/s, 351.35MB/s.

With t9_app & 333 ms loop (3Hz, 32% busy): 0.211 sec or 39.75MB/s, 318.05MB/s.

Able to do 1000 reps OK.

With t9_app & 250 ms loop (4Hz, 43% busy): 0.232 sec or 36.15MB/s, 289.26MB/s.

Able to do 1000 reps OK.

With t9_app & 225 ms loop (4.4Hz, 48% busy): 0.220 sec or 38.13MB/s, 305.04MB/s.

Able to do 91 reps before failing.

With t9_app & 200 ms loop (5Hz, 54% busy): 0.230 sec or 36.47MB/s, 291.77MB/s.

Able to do 29 reps before failing.

Reference:

1009/sgx200

1009/sgx225

1009/sgx250

1009/sgx333

------------------------------------------------------------------------

Without XTrigger, 2 consecutive ce_go() are about ~380ns apart.

2010-09-08 - t9_app and catp

Or why tony thinks it's better to co-add in RAM...

t9_app is uboot to that generate data in catp pools. It takes about

109 msec to run: 4.5 for catp setup + 105 for generating data.

Ave time to catp transfer after generating data: 0.191 sec or 43.91MB/s, 351.35MB/s.

With t9_app & 333 ms loop (3Hz, 32% busy): 0.211 sec or 39.75MB/s, 318.05MB/s.

Able to do 1000 reps OK.

With t9_app & 250 ms loop (4Hz, 43% busy): 0.232 sec or 36.15MB/s, 289.26MB/s.

Able to do 1000 reps OK.

With t9_app & 225 ms loop (4.4Hz, 48% busy): 0.220 sec or 38.13MB/s, 305.04MB/s.

Able to do 91 reps before failing.

With t9_app & 200 ms loop (5Hz, 54% busy): 0.230 sec or 36.47MB/s, 291.77MB/s.

Able to do 29 reps before failing.

Reference:

1009/sgx200

1009/sgx225

1009/sgx250

1009/sgx333

------------------------------------------------------------------------

Without XTrigger, 2 consecutive ce_go() are about ~380ns apart.

2010-09-08 - t9_app and catp

Or why tony thinks it's better to co-add in RAM...

t9_app is uboot to that generate data in catp pools. It takes about

109 msec to run: 4.5 for catp setup + 105 for generating data.

Ave time to catp transfer after generating data: 0.191 sec or 43.91MB/s, 351.35MB/s.

With t9_app & 333 ms loop (3Hz, 32% busy): 0.211 sec or 39.75MB/s, 318.05MB/s.

Able to do 1000 reps OK.

With t9_app & 250 ms loop (4Hz, 43% busy): 0.232 sec or 36.15MB/s, 289.26MB/s.

Able to do 1000 reps OK.

With t9_app & 225 ms loop (4.4Hz, 48% busy): 0.220 sec or 38.13MB/s, 305.04MB/s.

Able to do 91 reps before failing.

With t9_app & 200 ms loop (5Hz, 54% busy): 0.230 sec or 36.47MB/s, 291.77MB/s.

Able to do 29 reps before failing.

Reference:

1009/sgx200

1009/sgx225

1009/sgx250

1009/sgx333

------------------------------------------------------------------------